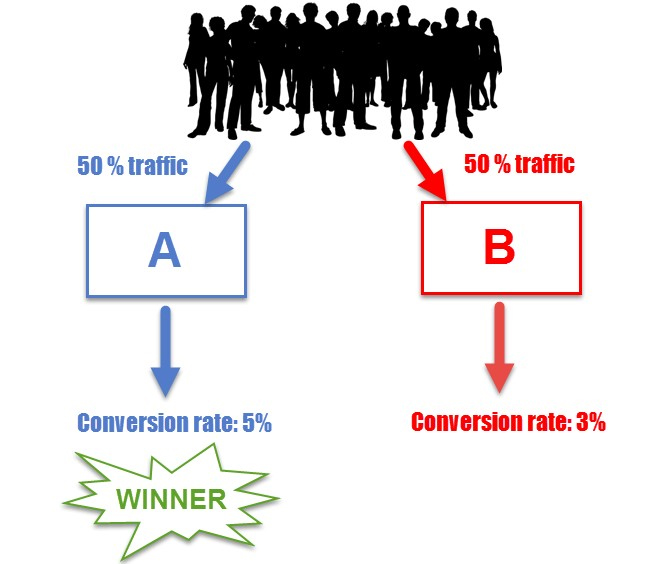

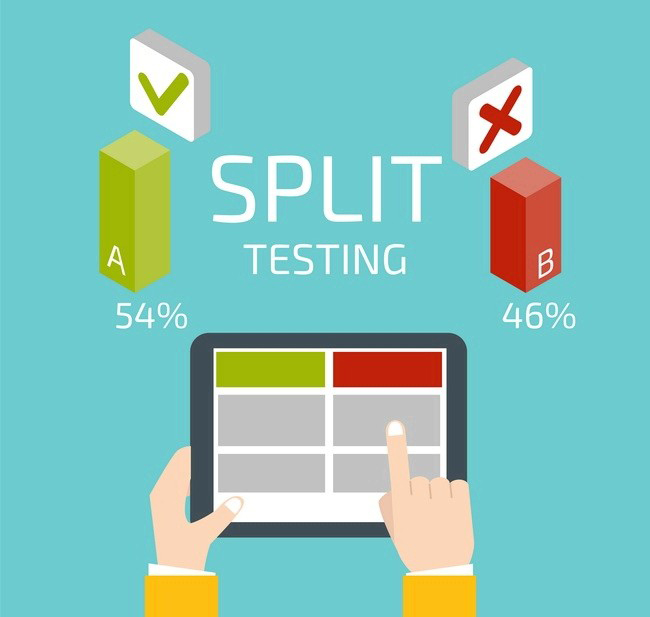

A/B testing (also called split testing) is a process in which two versions (A and B) are compared in a defined environment or situation to determine which one is more effective. A version can be anything from a banner, a website, or an advertising template to an email, and effectiveness is judged against the goal the tester has set for these versions.

What is A/B testing?

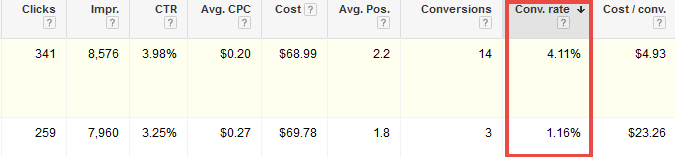

A sales website aims to get customers to buy, or to buy more. An advertising banner aims to get more clicks. An email aims to be opened more often. Each has a goal: to get the customer to perform a desired action. This action is called a conversion, and the share of people who perform it is called the conversion rate.

Measuring and evaluating versions A and B therefore means measuring and comparing the conversion rate of each version.
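As a rough illustration of the definition above, the conversion rate is simply conversions divided by visitors. The function name and all of the numbers below are made up for the example; they do not come from a real campaign.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who performed the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


# Hypothetical numbers, for illustration only.
rate_a = conversion_rate(conversions=120, visitors=4000)
rate_b = conversion_rate(conversions=150, visitors=4000)

# Relative lift: how much better (or worse) B is, relative to A.
relative_lift = (rate_b - rate_a) / rate_a

print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  lift: {relative_lift:+.1%}")
```

Comparing the two rates, rather than the raw conversion counts, keeps the comparison fair when the two versions receive different amounts of traffic.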

Why do I need to do A/B testing?

If you want more conversions, there are two ways to get them. The first is to bring more customers to the website or store. The second is to raise the conversion rate, so that the same number of visitors produces more conversions. A/B testing helps with the second by improving the effectiveness of whatever you are working on, whether that is web development, application development, advertising, or sales.

Acquiring more customers is usually expensive, while A/B testing often costs little, and even small changes can produce a substantial increase in conversions.

A/B testing process

To run a proper A/B test (or any other kind of test), follow the scientific method, which includes the following steps:

1. Ask a question:

Start with a question that sets the direction and objective of the test and makes clear what outcome you are looking for. Examples include "How can we reduce the bounce rate of a landing page?", "How can we increase the number of people who sign up through the form on the homepage?", or "How can we improve the CTR of our banner ads?".

2. Do background research:

It is important to understand how customers behave when they convert, using the measurement tools available for each channel: Google Analytics for a website, email clients for email, and social listening tools for social media.

3. Form a hypothesis:

With the question above and what you know about customer behavior when converting, try to come up with a hypothesis about how to answer the question. "A link to the tutorial page below the footer can reduce the bounce rate", "making the registration button more prominent will increase the number of subscribers", and "a banner with an image of an attractive model will have a higher CTR" are examples of hypotheses for the questions mentioned above.

4. Determine the sample size and test duration:

Next, determine how many customers will take part in the A/B test. The sample must be large enough for a difference between versions A and B to show clearly once the test is over. The test duration should also be chosen carefully so that the results are not distorted by seasonal factors or external events that change customers' needs and behavior. You can use an estimation tool to calculate the test run time.
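To give a feel for what such an estimation tool does, here is a minimal sketch using the common normal-approximation formula for comparing two proportions. It is one standard approach, not necessarily what any particular tool implements. The function names, the default significance level (5%), the default power (80%), and the traffic figures are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant (two-sided test)."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


def estimated_days(n_per_variant: int, daily_visitors: int) -> int:
    """Days needed if all daily traffic is split evenly between A and B."""
    return math.ceil(2 * n_per_variant / daily_visitors)


# Hypothetical scenario: 3% baseline, hoping to detect a rise to 3.75%.
n = sample_size_per_variant(baseline_rate=0.03, expected_rate=0.0375)
print(n, "visitors per variant")
print(estimated_days(n, daily_visitors=1500), "days at 1,500 visitors per day")
```

The smaller the difference you want to detect, the larger the sample this formula asks for, which is why tests of subtle changes need to run longer.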

5. Run the test:

Create a new version B to test against the original version A. Version B implements the hypothesis you have formed (a link below the footer, a more prominent registration button, a banner with an attractive model), and its conversion rate is measured against that of version A.

6. Collect information and conduct analysis:

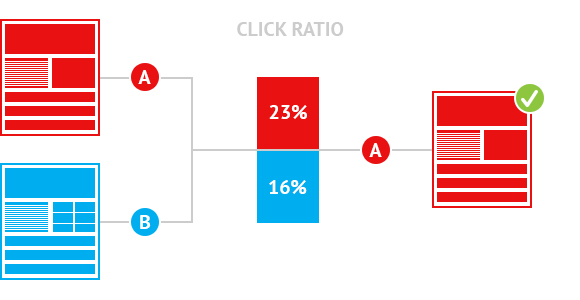

If, after the A/B test, version B shows a higher conversion rate than version A (lower bounce rate, more subscribers, higher CTR), then version B is more effective. If the conversion rate is lower or unchanged, the hypothesis you formed to solve your problem was not correct. In that case, go back to step 3 and form a new hypothesis.
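A higher rate for B can still be the result of chance. One common way to check is a two-proportion z-test. The sketch below is a generic textbook version using only the Python standard library; the function name and the visitor and conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between B and A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, nothing to distinguish
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical result: A converts 120 of 4,000 visitors, B converts 150 of 4,000.
z, p = two_proportion_z_test(120, 4000, 150, 4000)
print(f"z = {z:.2f}, p-value = {p:.3f}")
if p < 0.05:
    print("The difference is unlikely to be chance at the 5% level.")
else:
    print("Not enough evidence yet that B really differs from A.")
```

With these made-up numbers B converts 25% better in relative terms, yet the p-value stays above 0.05, so the sample is still too small to call B the winner.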

7. Share the results with all stakeholders:

Send the information and insights gained from the test to the relevant teams (programming, UI/UX design, optimization, etc.). Replace version A with version B if B is genuinely more effective, after considering all the possible consequences of the replacement.

Repeat this procedure from the beginning to address another question or problem.

Applications of A/B testing

A/B testing can be applied to improve web development, online and offline advertising, mobile apps, and email marketing.

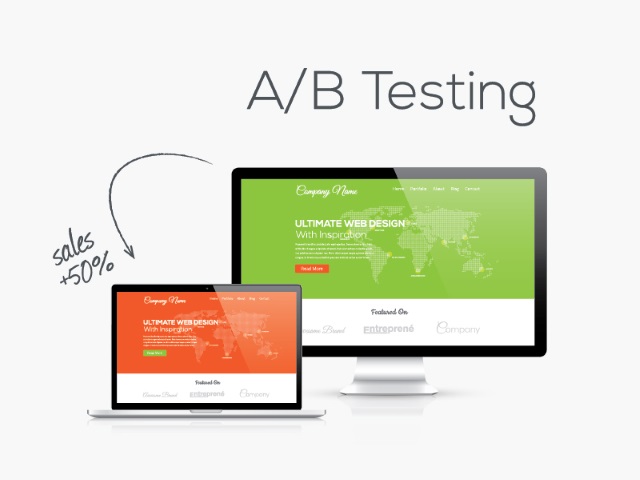

1. For website

On websites, A/B testing mostly concerns the interface and user experience (UI/UX), because these factors directly affect whether users convert. You can A/B test any element that may affect user behavior, such as images, titles, content, calls to action, and forms. Test each element that you think could improve the conversion rate, one at a time.

2. For advertising and sales

For the online segment, A/B testing is often used to measure the effectiveness of different ad variants. For example, when you write AdWords ad copy for a group of keywords (an ad group), always write two different ads and run them in parallel to learn which one is more effective after a period of time. Likewise, with GDN or Facebook ads, use different ad designs in the same campaign, measure their effectiveness, and keep running the more effective ones. Optimizing your ads regularly by testing different options will help you continually improve your conversion rate and make your ads more effective.

For the offline segment, A/B testing can be used to evaluate the effectiveness of advertising channels such as newspapers, leaflets, and billboards. For example, by printing a different coupon code on each newspaper ad, leaflet, or billboard, advertisers can tell which ad is more effective by counting how many people use each coupon code. In other cases, different phone numbers can be used in place of coupon codes.
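A minimal sketch of the coupon-code idea: each channel gets its own code, and redemptions are tallied per channel. The codes, channel names, and the list of redeemed codes are invented for the example.

```python
from collections import Counter

# Hypothetical mapping: one coupon code per offline channel.
COUPON_TO_CHANNEL = {
    "NEWS10": "newspaper",
    "LEAF10": "leaflet",
    "BILL10": "billboard",
}


def redemptions_by_channel(redeemed_codes):
    """Count how many redeemed coupons came from each channel."""
    counts = Counter()
    for code in redeemed_codes:
        # Normalize input, since customers may type codes inconsistently.
        channel = COUPON_TO_CHANNEL.get(code.strip().upper(), "unknown")
        counts[channel] += 1
    return counts


# Hypothetical list of codes entered at checkout.
redeemed = ["NEWS10", "leaf10", "NEWS10", "BILL10", " news10 ", "XYZ"]
for channel, count in redemptions_by_channel(redeemed).most_common():
    print(channel, count)
```

Redemption counts only show which channel drove more responses. To compare effectiveness fairly you would also need to know how many people each ad reached.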

Another application, in store sales, is placing products in different locations to measure how much attention they attract and, ultimately, to get customers to buy more. This shows that A/B testing is versatile and flexible, and can be adapted to whatever objectives you set.

3. For mobile applications

A/B testing is also applied in mobile application development and, as with websites, mainly serves to improve the product's UI/UX. For mobile applications, testing is often harder, both technically and in terms of user behavior. Technically, a test requires the application to be updated and approved by the App Store or Google Play before it reaches users, so it takes more time. In terms of user behavior, not everyone updates to the new version immediately, and the mobile user experience is quite different from the web.

Many tools currently support A/B testing for mobile applications. Personally, I have had the chance to try two of them, Splitforce and Apptimize, and both are quite good. You can also refer to the list of 20 A/B testing tools for mobile.

4. For email marketing

Gone are the days when you could push out hundreds of thousands of emails and assume users would read them. Email clients have increasingly sophisticated filters that send spam straight to the trash, and even so customers are still buried under dozens or even hundreds of emails every day. What matters is how to get customers to open your emails and interact with them. The answer is A/B testing. If you wonder which subject line will appeal to readers and raise the open rate, test it. If you don't know which CTA will get users to click the link, test that too.

Most email sending tools today, such as MailChimp and Benchmark Email, have a feature for A/B testing the content you send so that you can measure the effectiveness of a campaign.

Tips for conducting A/B testing

1. DO

Know when to stop testing: if you stop too early, you lose the data you need to make the right decision. Running the test for too long is also harmful, because if the test version performs badly, it can hurt your conversion rate and total sales.

Keep it consistent: when running an A/B test, you need a way to remember which users were assigned the test version so that they always see that version, to avoid disrupting the user experience. If a button is changed for a test and this button appears in many places on the website, customers must see the same version of it everywhere. Cookies are the most common method.
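One way to keep assignments consistent is to derive the variant from a stable visitor ID, for example an ID stored in a cookie, by hashing it. This is a sketch of that general technique, not a description of how any specific testing tool works. The function name, the experiment name, and the ID format are assumptions made for the example.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Deterministically map a visitor to 'A' or 'B'.

    The same (user_id, experiment) pair always yields the same variant,
    so a returning visitor keeps seeing the version they saw before.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < share_b else "A"


# Hypothetical visitor ID, e.g. read from a cookie set on the first visit.
visitor = "visitor-12345"
print(assign_variant(visitor, "signup-button-color"))
print(assign_variant(visitor, "signup-button-color"))  # same answer again
```

Including the experiment name in the hash means that a visitor who lands in B for one test is not automatically in B for every other test.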

Test many times: not every A/B test will produce the results you want or help you solve your problem. So keep testing, again and again, in different directions. If each test improves your conversion rate a little, many such tests will add up to a bigger effect.

Mind the difference between mobile and desktop traffic: customers who visit on mobile may behave completely differently from those on desktop, depending on the design, the UI/UX, and whether the website is mobile-friendly. So keep the traffic split in mind when A/B testing a website. It is best to test mobile traffic and desktop traffic separately.
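A small sketch of reporting conversion rates separately for each device and variant, so that a difference on mobile is not hidden by desktop traffic or the other way around. The event format (device, variant, converted) and the sample data are hypothetical.

```python
from collections import defaultdict


def rates_by_segment(events):
    """Conversion rate for every (device, variant) pair.

    `events` is an iterable of (device, variant, converted) tuples,
    one tuple per visitor.
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for device, variant, converted in events:
        totals[(device, variant)][0] += 1
        totals[(device, variant)][1] += int(converted)
    return {key: conv / visits for key, (visits, conv) in totals.items()}


# Hypothetical visitor log.
events = [
    ("mobile", "A", False), ("mobile", "A", True),
    ("mobile", "B", False), ("mobile", "B", False),
    ("desktop", "A", False), ("desktop", "A", False),
    ("desktop", "B", True), ("desktop", "B", True),
]
for (device, variant), rate in sorted(rates_by_segment(events).items()):
    print(f"{device:8s} {variant}: {rate:.0%}")
```

Each segment has less traffic than the whole site, so each one needs enough visitors of its own before its result means anything.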

2. DO NOT

Testing under unequal conditions: remember that versions A and B must be tested in parallel. You cannot run version A in week 1 and version B in week 2 and expect a correct result.

Concluding too early: results are only valid when they rest on a sufficiently large number of conversions and a sufficiently long test period. You cannot decide that version A is better than B, or vice versa, when they differ by only a few conversions or the test has run for too short a time.

Surprising existing customers: it is best to run A/B tests on new customers only. If existing customers arrive and see everything looking different from before, they may be surprised, and this affects conversion rates, especially when you are not yet sure whether the test version will be adopted.

Letting a hunch override the results: sometimes the test results may be completely contrary to what you expected. A red CTA on a green background may look glaring and uncomfortable, yet the results may prove that it is more effective. What you need is a better conversion rate, so don't let your hunch overrule the test results.
